Performance

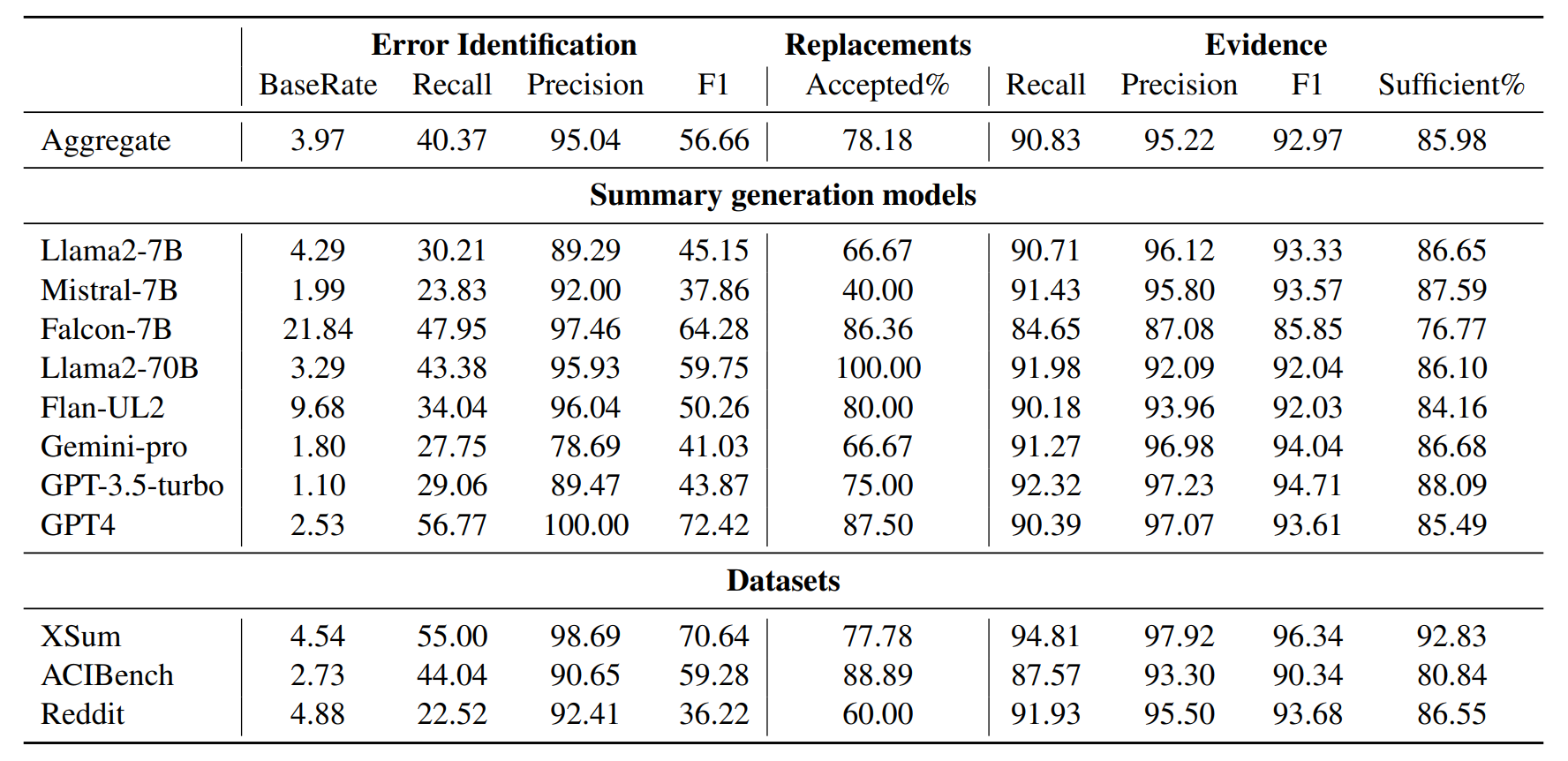

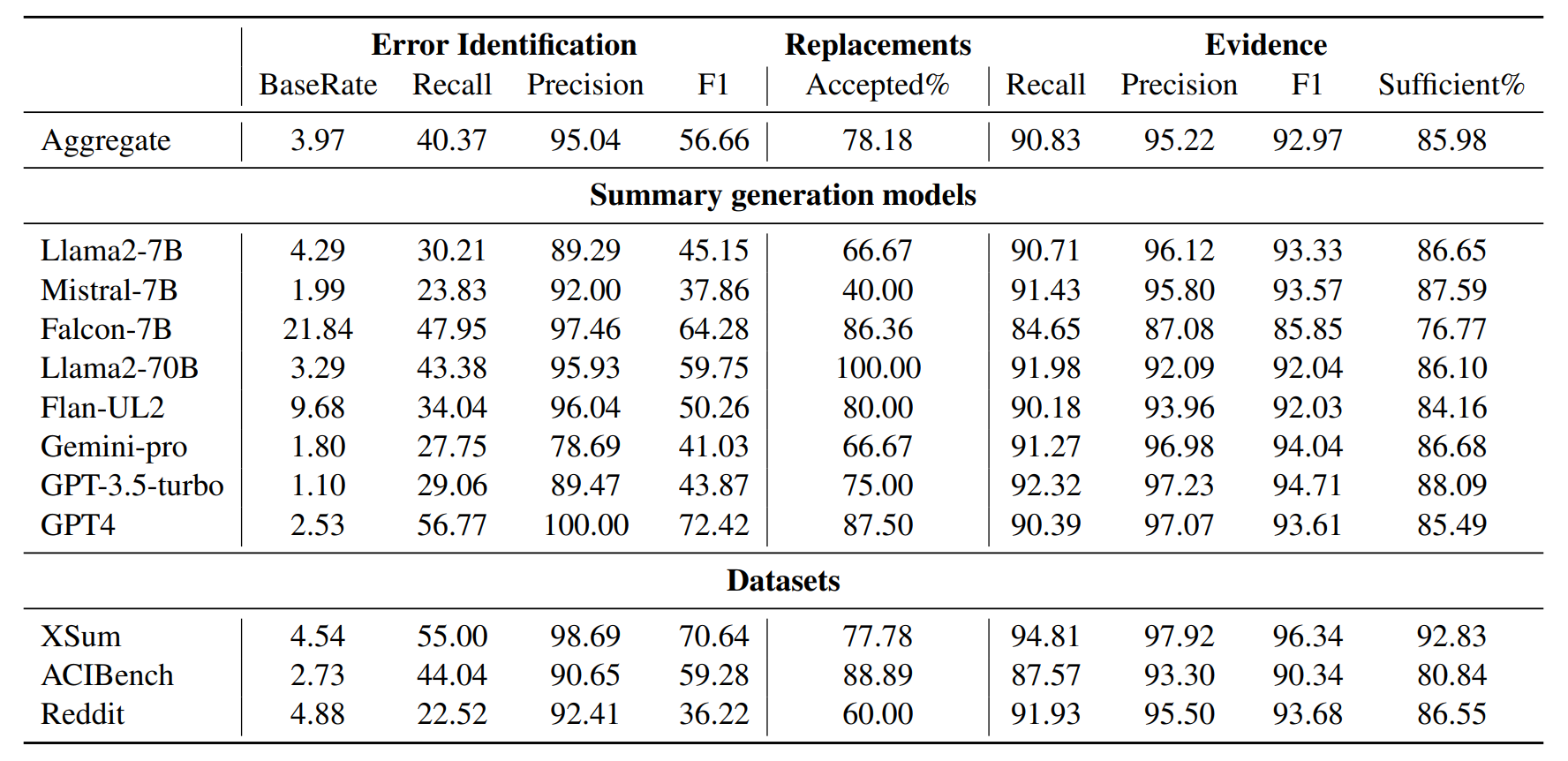

Results from human evaluation of GenAudit predictions (using the fine-tuned Flan-UL2 backend) on LLM-generated summaries of documents from different datasets. Please refer to the paper for more details.

LLMs can generate factually incorrect statements even when provided access to reference documents. Such errors can be dangerous in high-stakes applications (e.g., document-grounded QA for healthcare or finance). We present GenAudit, a tool intended to assist in fact-checking LLM responses for document-grounded tasks. GenAudit suggests edits to the LLM response by revising or removing claims that are not supported by the reference document, and also presents evidence from the reference for facts that do appear to have support. We train models to execute these tasks, and design an interactive interface to present suggested edits and evidence to users. Comprehensive evaluation by human raters shows that GenAudit can detect errors in the outputs of 8 different LLMs when summarizing documents from diverse domains. We provide the tool and fact-checking models for public use.

GenAudit can be installed from PyPI. You can get it up and running with the following shell commands. If you have multiple GPUs, you can spawn more than one fact-checking model in the backend so that multiple sentences are fact-checked in parallel.

```shell
pip install genaudit

# if you have single gpu
python -m genaudit.launch --port <port-value> --qa-model hf:mistralai/Mistral-7B-Instruct-v0.1 \
    --factcheck-model hf:kundank/genaudit-usb-flanul2 --num-factcheck-processes 1 \
    --use-single-gpu --qa-quantize 4bit

# if you have N gpus to use
python -m genaudit.launch --port <port-value> --qa-model hf:mistralai/Mistral-7B-Instruct-v0.1 \
    --factcheck-model hf:kundank/genaudit-usb-flanul2 --num-factcheck-processes <N-1> \
    --qa-quantize 4bit

# using OpenAI models for QA (e.g. gpt-3.5-turbo)
python -m genaudit.launch --port <port-value> --qa-model oai:gpt-3.5-turbo-16k-0613 \
    --factcheck-model hf:kundank/genaudit-usb-flanul2 --num-factcheck-processes 1 --use-single-gpu
```
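If you launch the tool from a script, the GPU-count rule in the commands above (one fact-checking process plus `--use-single-gpu` on a single GPU, otherwise `N-1` processes) can be encoded in a small helper. The sketch below is not part of GenAudit: `build_launch_command` is a hypothetical name, port 8000 is a placeholder, and it only assembles the flags already shown above, so treat it as a convenience under those assumptions.

```python
import shlex


def build_launch_command(port, num_gpus,
                         qa_model="hf:mistralai/Mistral-7B-Instruct-v0.1",
                         factcheck_model="hf:kundank/genaudit-usb-flanul2",
                         qa_quantize="4bit"):
    """Assemble the genaudit.launch argument list for a given GPU count.

    Hypothetical helper: it uses only the flags shown in the README commands.
    Pass qa_quantize=None to omit --qa-quantize (as in the OpenAI example).
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")

    single_gpu = num_gpus == 1
    # Mirrors the README: 1 process on a single GPU, otherwise N-1 processes.
    num_processes = 1 if single_gpu else num_gpus - 1

    args = [
        "python", "-m", "genaudit.launch",
        "--port", str(port),
        "--qa-model", qa_model,
        "--factcheck-model", factcheck_model,
        "--num-factcheck-processes", str(num_processes),
    ]
    if single_gpu:
        args.append("--use-single-gpu")
    if qa_quantize is not None:
        args += ["--qa-quantize", qa_quantize]
    return args


# Print a shell-ready command for a 4-GPU machine (port is a placeholder).
print(shlex.join(build_launch_command(port=8000, num_gpus=4)))
```

The returned list can also be passed directly to `subprocess.run` instead of being joined into a string.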

We release a series of fine-tuned LLMs to serve as the backend of the tool. They come in different sizes, so you can choose one based on how much GPU memory you have and how much latency is acceptable. Pass the model name via the --factcheck-model argument. The models are available on Hugging Face; the commands and examples in this document use hf:kundank/genaudit-usb-flanul2.

```python
from genaudit import FactChecker

fc = FactChecker("hf:kundank/genaudit-usb-flanul2")

ref = '''Carnegie Mellon University (CMU) is a private research university in Pittsburgh, Pennsylvania. \
The institution was formed by a merger of Carnegie Institute of Technology and Mellon Institute \
of Industrial Research in 1967. In the 1990s and into the 2000s, Carnegie Mellon solidified its \
status among American universities, consistently ranking in the top 25. In 2018, Carnegie Mellon's \
Tepper School of Business placed 12th in an annual ranking of U.S. business schools by Bloomberg Businessweek.'''

gen = "CMU is a top-ranked university located in Pittsburgh. It was formed by merging Carnegie Institute \
of Technology, Mellon Institute of Industrial Research, and the Cranberry Lemon Institute. Its business school \
was ranked 15th in the US by Bloomberg Businessweek."

fc.check(reference=ref, claim=gen)
```

```python
######################
####### Output #######
######################

{'reference_sents': ['Carnegie Mellon University (CMU) is a private research university in Pittsburgh, Pennsylvania.',
                     'The institution was formed by a merger of Carnegie Institute of Technology and Mellon Institute of Industrial Research in 1967.',
                     'In the 1990s and into the 2000s, Carnegie Mellon solidified its status among American universities, consistently ranking in the top 25.',
                     "In 2018, Carnegie Mellon's Tepper School of Business placed 12th in an annual ranking of U.S. business schools by Bloomberg Businessweek."],
 'claim_sents': [{'evidence_labels': [0, 2],
                  'todelete_spans': [],
                  'replacement_strings': [],
                  'txt': 'CMU is a top-ranked university located in Pittsburgh.',
                  'success': True,
                  'edited_txt': 'CMU is a top-ranked university located in Pittsburgh.'},
                 {'evidence_labels': [1],
                  'todelete_spans': [[57, 58], [98, 133]],
                  'replacement_strings': [' and', ''],
                  'txt': 'It was formed by merging Carnegie Institute of Technology, Mellon Institute of Industrial Research, and the Cranberry Lemon Institute.',
                  'success': True,
                  'edited_txt': 'It was formed by merging Carnegie Institute of Technology and Mellon Institute of Industrial Research.'},
                 {'evidence_labels': [3],
                  'todelete_spans': [[30, 35]],
                  'replacement_strings': [' 12th'],
                  'txt': 'Its business school was ranked 15th in the US by Bloomberg Businessweek.',
                  'success': True,
                  'edited_txt': 'Its business school was ranked 12th in the US by Bloomberg Businessweek.'}]}
```
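To make the output fields concrete, here is a minimal sketch of how they appear to fit together. It assumes, based only on the sample output above and not on documented behavior, that each entry of `todelete_spans` is a half-open `[start, end)` character range into `txt`, paired by position with `replacement_strings`, and that `evidence_labels` holds zero-based indices into `reference_sents`. The helper names `apply_edits` and `evidence_for` are hypothetical and not part of the `genaudit` package.

```python
def apply_edits(txt, todelete_spans, replacement_strings):
    """Replace each [start, end) character span of txt with its paired string.

    Assumption: spans are half-open character offsets and do not overlap.
    """
    pairs = sorted(zip(todelete_spans, replacement_strings),
                   key=lambda pair: pair[0][0])
    pieces = []
    cursor = 0
    for (start, end), replacement in pairs:
        pieces.append(txt[cursor:start])  # keep text before the span
        pieces.append(replacement)        # substitute the suggested text
        cursor = end                      # skip the deleted span
    pieces.append(txt[cursor:])
    return "".join(pieces)


def evidence_for(claim_sent, reference_sents):
    """Look up the reference sentences cited as evidence for one claim sentence.

    Assumption: evidence_labels are zero-based indices into reference_sents.
    """
    return [reference_sents[i] for i in claim_sent["evidence_labels"]]


# Data copied from the sample output above (second claim sentence).
reference_sents = [
    "Carnegie Mellon University (CMU) is a private research university in Pittsburgh, Pennsylvania.",
    "The institution was formed by a merger of Carnegie Institute of Technology and Mellon Institute of Industrial Research in 1967.",
]
claim_sent = {
    "evidence_labels": [1],
    "todelete_spans": [[57, 58], [98, 133]],
    "replacement_strings": [" and", ""],
    "txt": "It was formed by merging Carnegie Institute of Technology, Mellon Institute of Industrial Research, and the Cranberry Lemon Institute.",
    "edited_txt": "It was formed by merging Carnegie Institute of Technology and Mellon Institute of Industrial Research.",
}

rebuilt = apply_edits(claim_sent["txt"],
                      claim_sent["todelete_spans"],
                      claim_sent["replacement_strings"])
# Under the assumptions above, the rebuilt text matches the tool's edited_txt.
assert rebuilt == claim_sent["edited_txt"]
print(rebuilt)
print(evidence_for(claim_sent, reference_sents))
```

Working from the spans rather than from `edited_txt` alone is useful when you want to show each suggested edit individually, for example to let a user accept or reject it.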

```bibtex
@article{krishna2024genaudit,
  title={GenAudit: Fixing Factual Errors in Language Model Outputs with Evidence},
  author={Krishna, Kundan and Ramprasad, Sanjana and Gupta, Prakhar and Wallace, Byron C and Lipton, Zachary C and Bigham, Jeffrey P},
  journal={arXiv preprint arXiv:2402.12566},
  year={2024}
}
```